Enhancing Generative AI Evaluation with Synthetic Raters

Doug Rosenoff • Location: Theater 5 • Haystack 2025

“In the realm of Generative AI, human Subject Matter Experts (SMEs) are the gold standard for evaluating AI outputs across diverse domains such as medicine, law, and finance. However, the human evaluation process is resource-intensive, both in terms of time and cost. This presentation explores the innovative use of Generative AI-based Synthetic Raters as a cost-effective alternative for evaluating AI-generated content.

A Synthetic Rater is a composite of three elements: a trained Large Language Model (LLM) or similar AI construct, a set of system-level parameters (e.g., prompts), and metadata for identification and versioning. These components mirror those used in human rating processes, allowing for a seamless integration into existing evaluation frameworks. The primary distinction lies in the training and background differences between human and synthetic raters, which can be analyzed using comparison and regression tools within an SME rating framework.

Our research introduces a robust framework for SME-based evaluation that leverages both human and synthetic rater results. We conducted extensive tests using various LLMs and system prompts, comparing synthetic-to-synthetic and human-to-synthetic evaluations across multiple metrics. The findings reveal significant potential for synthetic raters to complement human evaluations, offering diverse perspectives and enhancing overall assessment quality.

This presentation will detail the common metrics employed, the testing methodologies, and the results of our evaluations. We will also explore practical use cases and propose innovative strategies for integrating human and synthetic ratings, ultimately paving the way for more efficient and scalable AI evaluation processes.”
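The three-part definition of a Synthetic Rater in the abstract can be sketched as a small data structure, together with one possible human-to-synthetic comparison. This is only an illustration under stated assumptions: the field names (`model`, `system_prompt`, `rater_id`, `version`) are hypothetical, and Cohen's kappa is used here as a stand-in agreement metric, since the abstract does not name the metrics used in the talk.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class SyntheticRater:
    """Hypothetical container for the three elements named in the abstract."""
    model: str          # the trained LLM or similar AI construct
    system_prompt: str  # system-level parameters (here, just a prompt)
    rater_id: str       # metadata: identification
    version: str        # metadata: versioning


def cohen_kappa(ratings_a, ratings_b):
    """Chance-corrected agreement between two raters on the same items.

    Assumed metric for illustration; not taken from the talk.
    """
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("rating lists must be non-empty and of equal length")
    n = len(ratings_a)
    # Fraction of items on which the two raters gave the same label.
    observed = sum(x == y for x, y in zip(ratings_a, ratings_b)) / n
    # Agreement expected by chance from each rater's label frequencies.
    counts_a, counts_b = Counter(ratings_a), Counter(ratings_b)
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1.0:
        # Both raters used a single identical label for every item.
        return 1.0
    return (observed - expected) / (1.0 - expected)


rater = SyntheticRater(
    model="example-llm",  # placeholder name, not a real model
    system_prompt="Rate the answer's relevance as 0 or 1.",
    rater_id="synthetic-001",
    version="1.0",
)
human_ratings = [1, 1, 0, 0]
synthetic_ratings = [1, 0, 0, 0]
kappa = cohen_kappa(human_ratings, synthetic_ratings)
```

Keeping the rater immutable and versioned means each set of ratings can be traced back to the exact model and prompt that produced it, which is what makes synthetic-to-synthetic and human-to-synthetic comparisons repeatable.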


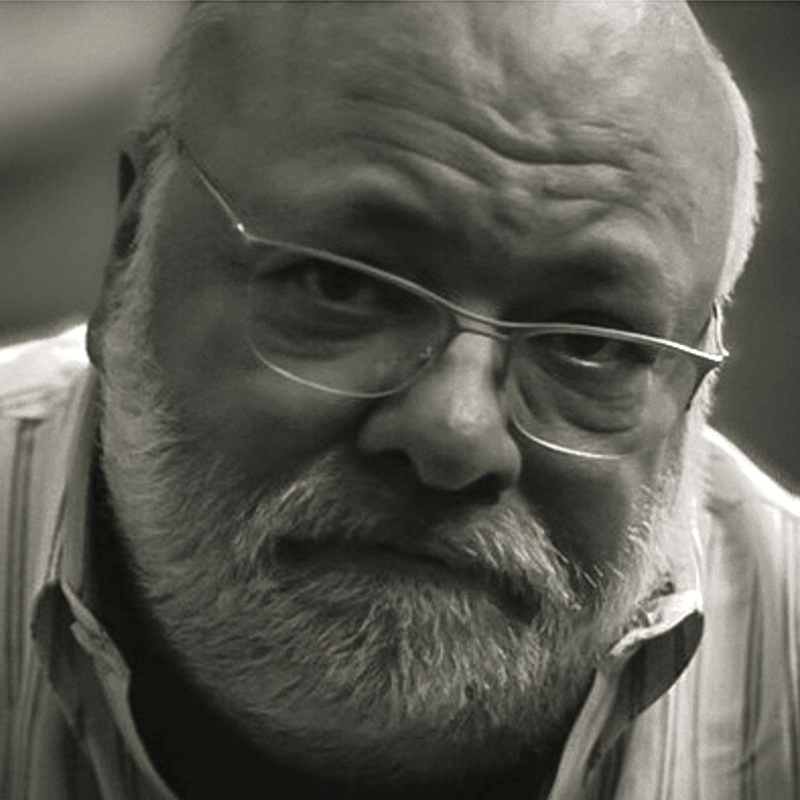

Doug Rosenoff

Lexis Nexis

For the past eight years, Doug Rosenoff has been a principal product manager / product owner in the Lexis Nexis Global Services team. He is currently responsible for STF (Search Test Framework) and ABE (A/B Experiments and Controlled Release). Originally a Research Geophysicist specializing in seismic processing methods during the 1980s, he moved into electronic legal research tool development in 1990. He holds multiple domestic and international patents in the areas of automated robust document linking and natural language search algorithms. In his spare time, he enjoys fine art photography, playing with his cats, and gardening.